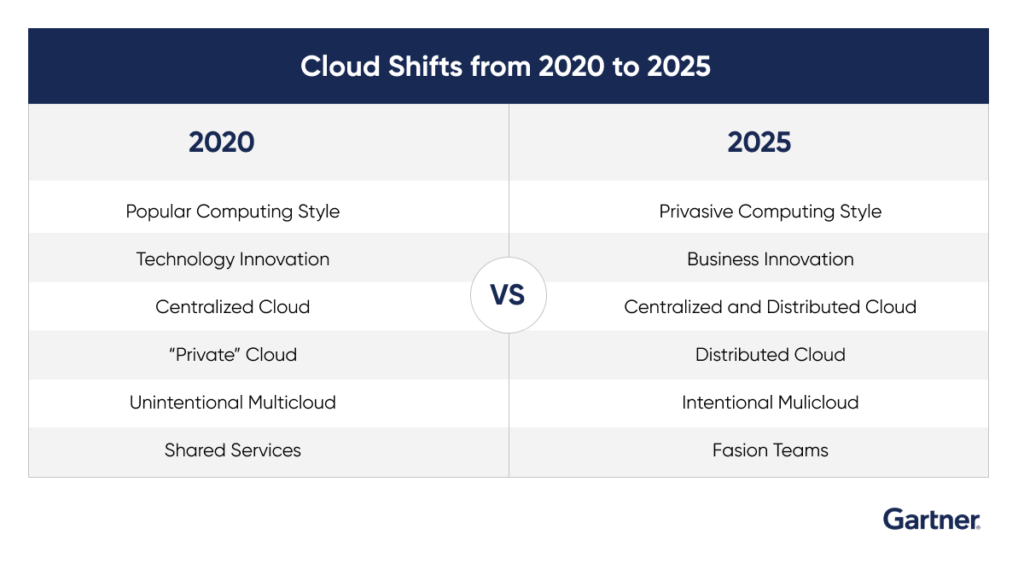

Traditional IT security is no longer what it was for the past few decades. The massive shift to cloud computing has changed how we perceive IT security. We have grown accustomed to ubiquitous cloud models, their convenience, and their unhindered connectivity. But our ever-increasing dependence on cloud computing also calls for new and stricter security considerations.

Cloud security is a subset of computer, network, and information security. It refers to the set of policies, technologies, applications, and controls that protect virtual IP addresses, data, applications, services, and cloud computing infrastructure against external and internal cybersecurity threats.

What are the security issues with the cloud?

Cloud data is stored in third-party data centers, so data integrity and security are always major concerns for cloud providers and tenants alike. The cloud can be implemented in different service models, such as:

- SaaS

- PaaS

- IaaS

And deployment models such as:

- Private

- Public

- Hybrid

- Community

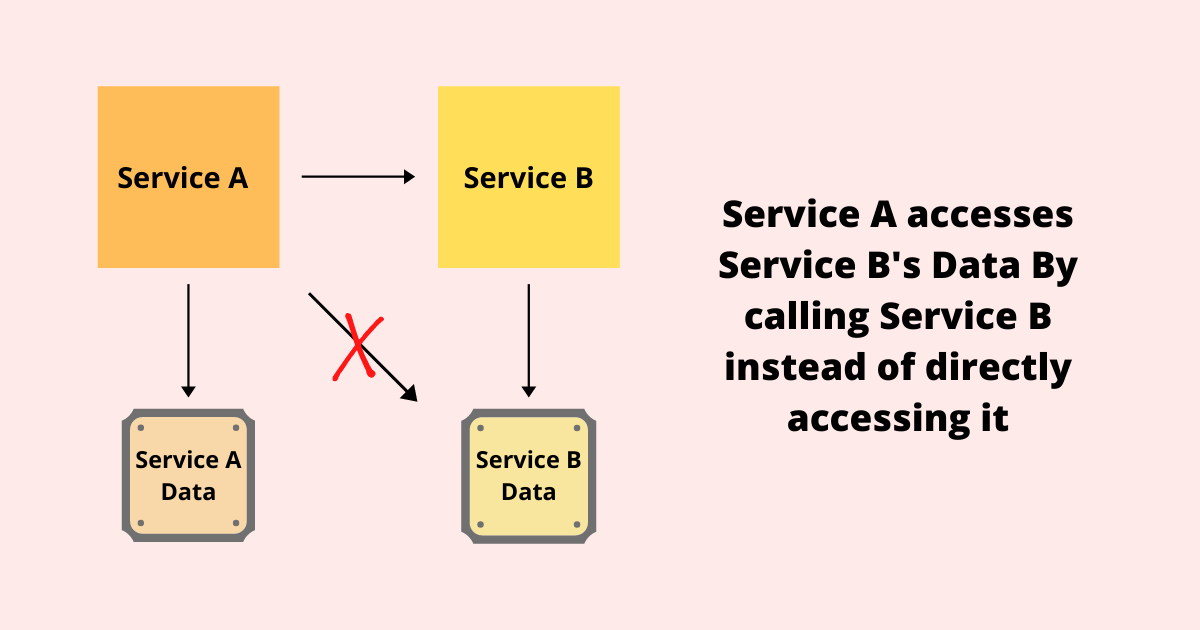

The security issues in the cloud fall into two categories: the issues that cloud providers (companies providing SaaS, PaaS, and IaaS) face, and the issues faced by their customers. The responsibility for security, however, is shared, and the split is set out in the cloud provider's shared responsibility model. The provider must take every measure to secure its infrastructure and its clients' data. Users, in turn, must secure their own applications and use strong passwords and authentication methods.

When a business chooses the public cloud, it relinquishes physical access to the servers that contain its data. Insider threats are a concern in this scenario because sensitive data is at risk. For this reason, cloud service providers typically run extensive background checks on all personnel with physical access to the data center's systems. Data centers are also monitored regularly for suspicious activity.

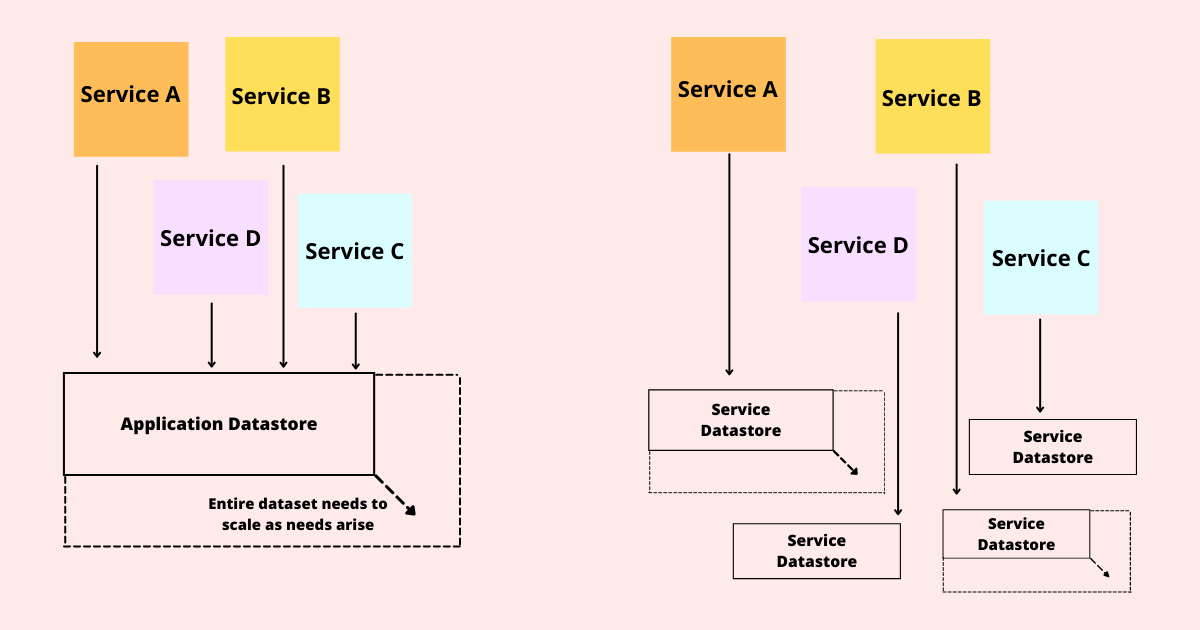

Unless it is a private cloud, a provider rarely dedicates its servers to a single customer. Instead, data from many customers shares the same hardware to conserve resources and cut costs. Consequently, there is a possibility that one user's private data becomes visible or accessible to other users. Cloud service providers should ensure proper data isolation and logical storage segregation to prevent this.
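To make logical segregation concrete, here is a minimal Python sketch. It assumes a hypothetical in-memory object store in which every object is keyed by a tenant ID; the names `TenantScopedStore`, `TenantAccessError`, `put`, and `get` are invented for this example and do not correspond to any provider's API.

```python
class TenantAccessError(Exception):
    """Raised when a tenant requests an object it does not own."""


class TenantScopedStore:
    """Hypothetical in-memory store that keeps each tenant's objects separate."""

    def __init__(self):
        # Objects are keyed by (tenant_id, object_name), so two tenants can
        # use the same object name without ever seeing each other's data.
        self._objects = {}

    def put(self, tenant_id, name, data):
        self._objects[(tenant_id, name)] = data

    def get(self, tenant_id, name):
        try:
            return self._objects[(tenant_id, name)]
        except KeyError:
            # The same error is raised whether the object is missing or
            # belongs to another tenant, so its existence is not leaked.
            raise TenantAccessError(
                f"{name!r} not found for tenant {tenant_id!r}"
            ) from None


store = TenantScopedStore()
store.put("tenant-a", "report.csv", b"a's data")
store.put("tenant-b", "report.csv", b"b's data")
```

Because the tenant ID is part of every lookup key, a request made on behalf of one tenant cannot resolve to another tenant's object, even when the object names collide.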

The growing use of virtualization in cloud implementation is another security concern. Virtualization changes the relationship between the operating system and the underlying hardware. It adds a layer that needs to be configured, managed, and secured properly.

Those are the main security concerns in the cloud. Now let's talk about how we can secure our cloud, starting with security controls:

Cloud Security Controls

An effective cloud security architecture must identify any current or future issues that may arise with security management. It must follow mitigation strategies, procedures, and guidelines to ensure a secure cloud environment. Security controls are used by security management to address these issues.

Let’s look at the categories of controls behind a cloud security architecture:

Deterrent Controls

Deterrents are administrative mechanisms used to ensure compliance with external controls and to reduce attacks on a cloud system. Deterrent controls, like a warning sign on a property, reduce the threat level by informing attackers about negative consequences.

Policies, procedures, standards, guidelines, laws, and regulations that guide an organization toward security are examples of such controls.

Preventive Controls

The primary goal of preventive controls is to safeguard the system against incidents by reducing, if not eliminating, vulnerabilities and by keeping unauthorized intruders out of the system. Examples include software or feature implementations such as firewall protection, endpoint protection, and multi-factor authentication.

Preventive controls also leave room for human error. They use security awareness training and exercises to address such issues at the outset, and they take into account the strength of authentication in preventing unauthorized access. Preventive controls reduce the likelihood of a loss event and, at their most effective, can eliminate the system's exposure to a malicious action.
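As an example of a second authentication factor, the sketch below computes a time-based one-time password (TOTP) following RFC 6238 with HMAC-SHA1, using only the Python standard library. The function names `totp` and `verify_second_factor` are assumptions made for this illustration; a production system would normally rely on a vetted authentication library or the provider's own MFA service.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    # The moving factor is the number of `step`-second intervals since the epoch.
    counter = unix_time // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low 4 bits of the last byte select
    # a 4-byte window, whose top bit is cleared.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_second_factor(secret: bytes, submitted: str, unix_time: int) -> bool:
    """Check a submitted code against the code expected for `unix_time`."""
    expected = totp(secret, unix_time)
    # Constant-time comparison avoids leaking how many digits matched.
    return hmac.compare_digest(expected.encode(), submitted.encode())
```

A login flow would call `verify_second_factor` only after the password check has succeeded, so that an attacker needs both the password and the device holding the shared secret.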

Detective Controls

The purpose of detective controls is to detect and respond appropriately to any incidents that occur. In the event of an attack, a detective control will alert the preventive or corrective controls to deal with the problem. These controls function during and even after an event has taken place.

System and network security monitoring, including intrusion detection and prevention methods, is used to detect threats in cloud systems and the accompanying communications infrastructure.

Many organizations go as far as acquiring or building their own security operations center (SOC), where a dedicated team monitors the IT infrastructure. Detective controls also include physical security controls, intrusion detection systems, and anti-virus/anti-malware tools, all of which help detect security vulnerabilities in the IT infrastructure.
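A simple detective control can be sketched as a rule over login events. The Python example below flags any source address that produces too many failed logins within a sliding time window. The event format, the function name `detect_bruteforce`, and the default threshold and window are assumptions chosen for illustration, not values taken from any particular monitoring product.

```python
from collections import defaultdict, deque


def detect_bruteforce(events, threshold=5, window_seconds=60):
    """Return the sources with at least `threshold` failed logins in the window.

    `events` is an iterable of (timestamp_seconds, source, succeeded) tuples,
    assumed to be sorted by timestamp.
    """
    recent_failures = defaultdict(deque)  # source -> timestamps of recent failures
    flagged = set()
    for timestamp, source, succeeded in events:
        if succeeded:
            continue
        failures = recent_failures[source]
        failures.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while failures and timestamp - failures[0] > window_seconds:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(source)
    return flagged
```

In practice, the flagged sources would be handed to a preventive control (for example, a temporary block) or to the SOC team for review.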

Corrective Controls

Corrective controls mitigate security incidents. They consist of technical, physical, and administrative measures taken during and after an incident to restore resources to their last working state. Examples include re-issuing an access card, repairing damage, terminating a process, and carrying out an incident response plan. Ultimately, corrective controls are all about recovering from and repairing the damage caused by a security incident or unauthorized activity.

Here are the key security and privacy considerations when selecting a cloud storage solution.

Security and Privacy

The protection of data is one of the primary concerns in cloud computing when it comes to security and privacy.

Millions of people have put their sensitive data on these clouds, and it is difficult to protect every piece of it. Data security is a critical concern in cloud computing because data is scattered across a variety of machines and storage devices, including PCs, servers, and mobile devices such as smartphones and wireless sensor networks. If cloud computing security and privacy are disregarded, each user's private information is at risk, and it becomes easier for cybercriminals to get into the system and exploit any user's privately stored data.

For this reason, virtual servers, like physical servers, should be safeguarded against data leakage, malware, and exploited vulnerabilities.

Identity Management

Identity Management is used to regulate access to information and computing resources.

Cloud providers can either integrate the customer's identity management system into their own infrastructure, using federation, SSO technology, or a biometric-based identification system, or supply an identity management system of their own.

CloudID, for example, offers cloud-based and cross-enterprise biometric identification while maintaining privacy. It ties users’ personal information to their biometrics and saves it in an encrypted format.
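To show what regulating access can look like once a user's identity is established, here is a minimal role-based check in Python. The role names, permission strings, and the `is_allowed` function are hypothetical and chosen only for this sketch; real identity management systems define their own policy models.

```python
# Hypothetical mapping from roles to the permissions they grant.
ROLE_PERMISSIONS = {
    "viewer": {"storage:read"},
    "editor": {"storage:read", "storage:write"},
    "admin": {"storage:read", "storage:write", "iam:manage"},
}


def is_allowed(user_roles, permission):
    """Return True if any of the user's roles grants the permission."""
    # Unknown roles grant nothing, so access is denied by default.
    return any(
        permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles
    )
```

The deny-by-default behavior matters here: a role that is missing from the mapping, or a user with no roles at all, receives no access.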

Physical Security

IT hardware such as servers, routers, and cables is also vulnerable. Cloud service providers should physically secure it against unauthorized access, interference, theft, fire, and flood.

This is accomplished by serving cloud applications from data centers that have been professionally specified, designed, built, managed, monitored, and maintained.

Privacy

Sensitive information such as card details or addresses should be masked and encrypted, with access limited to a few authorized people. Apart from financial and personal information, digital identities, credentials, and data about customer activity should also be protected.
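Masking is the simpler half of that advice to illustrate. The Python sketch below hides all but the last few digits of a card number while keeping separators such as spaces intact. The function name `mask_card_number` and the default of four visible digits are assumptions for this example. Masking only affects what is displayed; encrypting the stored value would additionally require a vetted cryptography library, which is not shown here.

```python
def mask_card_number(card_number: str, visible: int = 4) -> str:
    """Replace all but the last `visible` digits with '*', keeping separators."""
    total_digits = sum(ch.isdigit() for ch in card_number)
    to_mask = max(total_digits - visible, 0)
    masked = []
    for ch in card_number:
        if ch.isdigit() and to_mask > 0:
            masked.append("*")
            to_mask -= 1
        else:
            # Separators and the trailing visible digits pass through unchanged.
            masked.append(ch)
    return "".join(masked)
```

For instance, support staff could be shown the masked value, while the full number stays accessible only to the few systems and people that are authorized to see it.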

Penetration Testing

Rules of engagement for penetration testing are essential because the cloud is shared between customers, or tenants. Since the cloud provider is responsible for the security of the shared platform, the provider should authorize any scanning and penetration testing, whether it is carried out from inside or outside.

Parting words

It's easy to see why so many people enjoy using the cloud and are ready to entrust their sensitive data to it. However, a single data leak could jeopardize this confidence. Cloud computing security and privacy must therefore provide a solid line of defense against these cyber threats.

If you're overwhelmed by the sheer number of possible threats in cloud computing or lack the resources to put a secure cloud infrastructure in place, get on a call with us for a quick consultation.