Google Analytics 4 (GA4) is the latest version of Google Analytics, an analytics service offered by Google that tracks and reports website and app traffic. It was released in October 2020 and is the successor to the previous version, Universal Analytics.

One of the main differences between GA4 and Universal Analytics is that GA4 is designed to integrate more closely with other Google products, such as Google Ads, and to give users a more complete view of their customers across devices and channels. GA4 also includes new features such as automatic event tracking and audiences built from user behavior.

Why is GA4 necessary?

GA4 was designed to better meet the needs of modern businesses and organizations. It includes several features and capabilities that are not available in earlier versions of the product, such as:

- Enhanced user privacy controls: GA4 includes more robust controls for managing user data and protecting user privacy, including the ability to automatically delete user-level and event-level data once a configured retention period has passed.

- Improved data collection and analysis: GA4 uses machine learning to better understand user behavior and provide more detailed insights about how users interact with websites and apps, for example through predictive metrics such as purchase probability and churn probability.

- Enhanced integration with other Google products: GA4 integrates more seamlessly with other Google products, such as Google Ads and Google BigQuery, making it easier to use data from multiple sources to inform marketing and business strategy.

Overall, GA4 is intended to provide businesses and organizations with a more comprehensive and powerful tool for understanding and engaging with their customers.

Benefits/Advantages of GA4

- Customizable Interface

Google Analytics 4 has a more intuitive and customizable interface than Universal Analytics, which addresses some of the pain points that longtime Google Analytics users have faced.

You can easily adjust the date range of your reports and see important data at a glance, which makes the tool more pleasant to use.

- Control Over Custom Reporting

Google Analytics 4 puts custom reporting front and center. It lets you quickly pull up the data you care about and make decisions efficiently.

Custom reporting could be challenging in Universal Analytics. In GA4, you can create custom reports and precise, insightful data visualizations (for example, in the Explore section) that make it easier to understand your users in more detail.

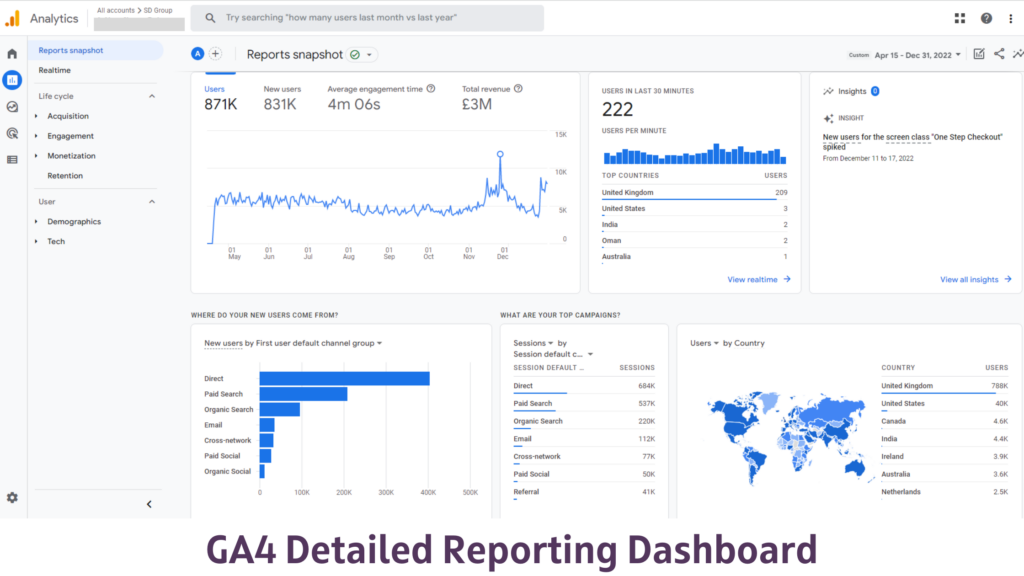

- Detailed Reporting

Google Analytics 4 is also capable of providing more detailed data, allowing you to see the type of traffic that has been coming in over the last few days, weeks, or months.

GA4 introduces an event-based data model, in which every interaction is recorded as an event, and this makes reports easier to work with. You can customize your events to fit your brand or business needs. For instance, an event might represent a specific user action that you would like to analyze, or you could use events to differentiate one set of users from another.
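To make the idea of a custom event concrete, here is a minimal Python sketch of the JSON body you could send for one custom event through the GA4 Measurement Protocol. The event name `newsletter_signup`, its parameters, and the client ID are hypothetical, and the naming rule in the code reflects our understanding of GA4's documented limits, so check the current documentation before relying on it.

```python
import json
import re

# Assumed naming rule: starts with a letter, then letters, digits, or
# underscores, with a maximum length of 40 characters.
EVENT_NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9_]{0,39}")


def build_event_payload(client_id: str, name: str, params: dict) -> str:
    """Return the JSON body for a single custom event."""
    if not EVENT_NAME_PATTERN.fullmatch(name):
        raise ValueError(f"invalid event name: {name!r}")
    body = {
        "client_id": client_id,
        "events": [{"name": name, "params": params}],
    }
    return json.dumps(body)


payload = build_event_payload(
    client_id="555.1234567890",  # hypothetical client ID
    name="newsletter_signup",    # hypothetical custom event name
    params={"plan": "free", "signup_location": "footer"},
)
print(payload)
```

You would POST this body to the Measurement Protocol `mp/collect` endpoint together with your own measurement ID and API secret, which are deliberately left out of the sketch.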

- Better Real-Time Data

Google Analytics 4 also improves on the real-time reporting in Universal Analytics. Its Realtime report shows what users do as they interact with your site, and you can even look at an individual user's actions!

DebugView in Google Analytics 4 lets you check the incoming data at an even more granular level.
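As a rough illustration of how events can be routed to DebugView, the Python sketch below marks every event in a Measurement Protocol style payload with a `debug_mode` parameter. This assumes, based on our reading of the GA4 documentation, that events carrying `debug_mode` show up in DebugView; the helper name `with_debug_mode` and the sample payload are hypothetical.

```python
import copy


def with_debug_mode(payload: dict) -> dict:
    """Return a copy of the payload with debug_mode set on every event."""
    debug_payload = copy.deepcopy(payload)
    for event in debug_payload.get("events", []):
        # Create the params dict if the event does not have one yet.
        event.setdefault("params", {})["debug_mode"] = True
    return debug_payload


# Hypothetical payload with two events, one of them without params.
original = {
    "client_id": "555.1234567890",
    "events": [
        {"name": "page_view", "params": {"page_title": "Home"}},
        {"name": "newsletter_signup"},
    ],
}
debug_version = with_debug_mode(original)
print(debug_version["events"])
```

Because the function works on a copy, the original payload stays untouched and can still be sent as regular production traffic.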

- New Automatic Tracking

Google Analytics 4 has made some exciting changes, including a more robust and easier-to-use Enhanced Measurement feature. This feature automatically tracks common interactions such as scrolls, outbound link clicks, site searches, video engagement, and file downloads. This makes it a powerful tool for measuring engagement with your website.

Because of this, you don't need to set up custom tagging for these interactions. The actions are tracked for you automatically.

- More Effective Data Exporting

You get more data with Google Analytics 4 and can export it more effectively. Each export can carry more detailed data that is tailored to your needs.

On top of all this, you can send your data to Google BigQuery if you want to be in total control of the data you collect. In Universal Analytics, BigQuery export was limited to Analytics 360 customers.
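Once your data is in BigQuery, each event row stores its parameters as a list of key/value records, with the value held in one of several typed fields. The Python sketch below shows one way to flatten such a list into a plain dictionary. The field names follow the GA4 BigQuery export schema as we understand it; the sample row is made up for illustration, and the helper name `flatten_event_params` is hypothetical.

```python
# Typed value fields used by the export schema (assumed from the GA4 docs).
VALUE_FIELDS = ("string_value", "int_value", "float_value", "double_value")


def flatten_event_params(event_params: list) -> dict:
    """Turn a list of {key, value{...}} records into a flat dict."""
    flat = {}
    for param in event_params:
        value = param.get("value", {})
        # Take the first typed field that is actually populated.
        flat[param["key"]] = next(
            (value[field] for field in VALUE_FIELDS if value.get(field) is not None),
            None,
        )
    return flat


# Made-up example row in the shape of an exported event.
row = {
    "event_name": "page_view",
    "event_params": [
        {"key": "page_title", "value": {"string_value": "Home", "int_value": None}},
        {"key": "engagement_time_msec", "value": {"int_value": 1200}},
    ],
}
print(flatten_event_params(row["event_params"]))
# → {'page_title': 'Home', 'engagement_time_msec': 1200}
```

Flattening the parameters this way makes exported events easier to load into a spreadsheet or data frame for your own reporting.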

Conclusion

With real-time insights, machine learning, and cross-device tracking, GA4 offers a range of advanced features that can help you better understand your customers and optimize your marketing efforts. Plus, with standard Universal Analytics properties set to stop processing new data on July 1, 2023, now is the perfect time to make the switch.

Whether you need help with setup, data migration, or ongoing support and training, our team of experts has you covered. Don’t miss out on the benefits of GA4 – contact our certified analytics expert for a free consultation today!